What Is GPU Computing?

Over the last several years, GPU computing has become more popular in high-performance computing (HPC) environments. According to Intersect360 Research, a market research firm that follows the HPC market, 34 of the 50 most popular HPC application packages offer GPU support, including all of the top 15 HPC apps.

In this article, we’ll look at what GPU computing is, how a GPU works in an HPC environment, and how the TotalView debugger helps developers stay on top of the challenges found in these complex applications.

What Is GPU Computing?

GPU computing is the use of graphics processing units (GPUs) as coprocessors to CPUs, which increases the compute power available in high-performance and complex computing environments.

GPUs have traditionally been used only to improve graphics performance on computers. Today, however, GPUs are increasingly used for work other than graphics, such as general-purpose data processing.

Standard CPUs typically consist of four to eight cores, maxing out at around 32, while GPUs can consist of hundreds to thousands of smaller cores. Working together in a massively parallel architecture, the CPU and GPU accelerate the rate at which information can be processed.
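To make the idea of many small cores working in parallel concrete, here is a minimal CUDA sketch in which each GPU thread adds one pair of array elements. The kernel name `vectorAdd`, the array size, and the block size of 256 are illustrative assumptions, not taken from any particular application.

```cuda
#include <cstdio>
#include <vector>
#include <cuda_runtime.h>

// Each GPU thread adds exactly one pair of elements.
__global__ void vectorAdd(const float* a, const float* b, float* c, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;  // global thread index
    if (i < n) {                                    // guard the last, partial block
        c[i] = a[i] + b[i];
    }
}

int main() {
    const int n = 1 << 20;                          // illustrative size
    const size_t bytes = n * sizeof(float);
    std::vector<float> hostA(n, 1.0f), hostB(n, 2.0f), hostC(n, 0.0f);

    // Allocate device buffers and copy the inputs from host to GPU.
    float *devA = nullptr, *devB = nullptr, *devC = nullptr;
    cudaMalloc(&devA, bytes);
    cudaMalloc(&devB, bytes);
    cudaMalloc(&devC, bytes);
    cudaMemcpy(devA, hostA.data(), bytes, cudaMemcpyHostToDevice);
    cudaMemcpy(devB, hostB.data(), bytes, cudaMemcpyHostToDevice);

    // Launch enough 256-thread blocks to cover all n elements.
    const int threadsPerBlock = 256;
    const int blocks = (n + threadsPerBlock - 1) / threadsPerBlock;
    vectorAdd<<<blocks, threadsPerBlock>>>(devA, devB, devC, n);

    // Copy the result back; this also waits for the kernel to finish.
    cudaError_t err = cudaMemcpy(hostC.data(), devC, bytes, cudaMemcpyDeviceToHost);
    if (err != cudaSuccess) {
        printf("CUDA error: %s\n", cudaGetErrorString(err));
        return 1;
    }
    printf("c[0] = %.1f, c[n-1] = %.1f\n", hostC[0], hostC[n - 1]);

    cudaFree(devA);
    cudaFree(devB);
    cudaFree(devC);
    return 0;
}
```

The CPU handles setup and data movement while the GPU runs the same small function across roughly a million threads, which is the division of labor described above.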

How Does a GPU Work in HPC?

Many high-performance computing clusters take advantage of GPU computing by adding multiple GPUs per node, which substantially increases the compute capacity available to teams.

However, doing this raises the complexity of the computing environment. GPUs work differently from CPUs: the way they process data and represent threads and other concepts can create challenges for a developer, such as:

- Going back and forth through the code running on the CPU and GPU.

- Examining data as it is transferred between the two of them.

- Developing hybrid MPI and OpenMP applications utilizing GPUs.

- Debugging multiple GPUs at a time when using a cluster configured with multi-GPU nodes.
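As a rough illustration of the multi-GPU case, the following CUDA sketch enumerates the GPUs on a node and runs the same kernel on each one in turn. The kernel name `fill` and the buffer size are hypothetical; a real application would typically drive the devices concurrently and often combine this with MPI or OpenMP.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// Writes the same value into every element of a device buffer.
__global__ void fill(int* data, int n, int value) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) {
        data[i] = value;
    }
}

int main() {
    int deviceCount = 0;
    if (cudaGetDeviceCount(&deviceCount) != cudaSuccess || deviceCount == 0) {
        printf("No CUDA devices found\n");
        return 1;
    }

    const int n = 1024;  // illustrative buffer size
    for (int dev = 0; dev < deviceCount; ++dev) {
        cudaSetDevice(dev);  // later CUDA calls on this thread target this GPU

        cudaDeviceProp prop;
        cudaGetDeviceProperties(&prop, dev);

        int* devData = nullptr;
        cudaMalloc(&devData, n * sizeof(int));
        fill<<<(n + 255) / 256, 256>>>(devData, n, dev);

        // Copy one element back to confirm which GPU produced it.
        int first = -1;
        cudaMemcpy(&first, devData, sizeof(int), cudaMemcpyDeviceToHost);
        printf("GPU %d (%s): data[0] = %d\n", dev, prop.name, first);

        cudaFree(devData);
    }
    return 0;
}
```

Even in this small program, the same kernel source runs in a separate context on every device, so a debugger has to track which GPU a given thread or buffer belongs to.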

A capable debugger helps developers manage these challenges. It’s critical to have one that supports multiple GPUs and the latest GPU development technologies, such as CUDA from NVIDIA.

GPU Computing With TotalView

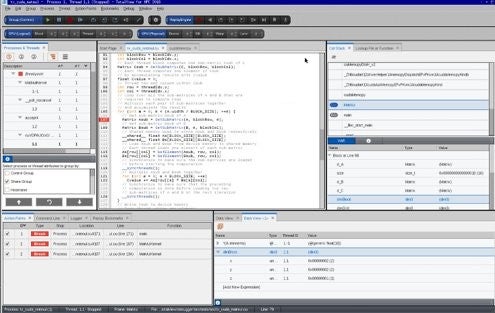

This is TotalView debugging a CUDA application in an NVIDIA GPU computing environment. In the central source area, you can set breakpoints on your host code or your kernel code at any time, whether before you start running your program or during execution.

Viewing Process and Thread States

As a GPU-enabled application runs, code executes across the CPU and GPU and is processed by hundreds to thousands of execution threads. Understanding where code is running and which CPU or GPU kernel threads have hit breakpoints can be a challenge when there are a lot of threads. As code runs, the Process and Threads view in the left-hand portion of the screenshot shows the state of the processes and threads running on both the CPU and GPUs in the application. Developers can easily navigate to any thread, view its state and data, and control its execution.
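To show what these GPU threads look like from the program's side, here is a small CUDA sketch in which every thread records its own block and thread coordinates. The `ThreadInfo` struct, the kernel name `recordThreads`, and the 4 x 8 launch shape are assumptions made for illustration. Compiling with nvcc's `-g -G` flags generates host and device debug information, which a CUDA-aware debugger needs to stop inside the kernel.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// Hypothetical record of where one GPU thread ran.
struct ThreadInfo {
    int block;     // blockIdx.x of the thread
    int thread;    // threadIdx.x within its block
    int globalId;  // flattened index across the whole grid
};

__global__ void recordThreads(ThreadInfo* out, int n) {
    int gid = blockIdx.x * blockDim.x + threadIdx.x;
    if (gid < n) {
        // A breakpoint here is hit by GPU threads, each with its own coordinates.
        out[gid].block = blockIdx.x;
        out[gid].thread = threadIdx.x;
        out[gid].globalId = gid;
    }
}

int main() {
    constexpr int blocks = 4;
    constexpr int threadsPerBlock = 8;
    constexpr int n = blocks * threadsPerBlock;

    ThreadInfo* devInfo = nullptr;
    cudaMalloc(&devInfo, n * sizeof(ThreadInfo));

    // A breakpoint here stops the single host (CPU) thread before the launch.
    recordThreads<<<blocks, threadsPerBlock>>>(devInfo, n);

    ThreadInfo hostInfo[n];
    cudaError_t err = cudaMemcpy(hostInfo, devInfo, n * sizeof(ThreadInfo),
                                 cudaMemcpyDeviceToHost);
    if (err != cudaSuccess) {
        printf("CUDA error: %s\n", cudaGetErrorString(err));
        return 1;
    }

    // Print the first thread of each block.
    for (int i = 0; i < n; i += threadsPerBlock) {
        printf("global %2d -> block %d, thread %d\n",
               hostInfo[i].globalId, hostInfo[i].block, hostInfo[i].thread);
    }

    cudaFree(devInfo);
    return 0;
}
```

Real kernels launch far more than 32 threads, which is why an aggregated view of thread state becomes useful.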

To deal with scale, the Process and Threads view provides an aggregated display. It can be used to quickly understand everything running across a parallel job, right down to the thread level of detail within GPUs. TotalView aggregates that information so you can quickly find the state of your code and the state of the GPU, then drill down and examine what's going on in a particular location.

Setting breakpoints in CPU or GPU code should be easy, and you manage them in the Action Points view. TotalView clearly identifies where those breakpoints are and lets you set, enable, or disable them.

Additionally, through TotalView’s Data View you can easily look at the data that's on a GPU, including variables and arrays, see how data is transferred between the host and the GPU, and examine managed memory variables to make sure that it's all correct.
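For readers unfamiliar with managed memory, the sketch below shows the kind of variable involved: a single pointer allocated with `cudaMallocManaged` that both host and kernel code can dereference. The kernel name `scale` and the array contents are illustrative assumptions.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// Multiplies every element of the array in place.
__global__ void scale(float* data, int n, float factor) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) {
        data[i] *= factor;
    }
}

int main() {
    const int n = 8;
    float* data = nullptr;

    // Managed memory: one pointer valid on both the CPU and the GPU.
    if (cudaMallocManaged(&data, n * sizeof(float)) != cudaSuccess) {
        printf("cudaMallocManaged failed\n");
        return 1;
    }

    for (int i = 0; i < n; ++i) {
        data[i] = static_cast<float>(i);  // written by the host
    }

    scale<<<1, n>>>(data, n, 2.0f);       // modified by the GPU
    cudaDeviceSynchronize();              // wait before the host reads again

    for (int i = 0; i < n; ++i) {
        printf("data[%d] = %.1f\n", i, data[i]);
    }

    cudaFree(data);
    return 0;
}
```

Because the runtime moves the data between host and device automatically, inspecting such a variable before and after the kernel launch is a practical way to confirm that the values are what you expect.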

Support For GPU Computing

TotalView is up to date with the latest NVIDIA GPUs and CUDA SDKs and supports debugging CUDA applications on Linux x86-64, ARM64, and IBM Power9 (PowerLE) architectures. TotalView also supports complex environments, such as MPI-based configurations that use multiple GPUs, or hybrid environments that combine MPI and OpenMP.

Take Your GPU Computing to the Next Level

See how GPU computing and parallel programming are easier and faster with TotalView. Sign up for a free trial.

Additional Resources

- CUDA Debugging Support For Apps Using NVIDIA GPUs on ARM64

- How to Use the Data View

- How to Use the Processes and Threads View